When we wanted to start blogging for Monkton and Mission Mobility, we could not settle on blogging software we liked. Medium has a habit of preventing users from reading your posts. WordPress is a security disaster. Blogger is too expansive.

Our goal wasn't to write a heavy piece of blogging software, but something nimble that we could control.

More so, we wanted to leverage Amazon Web Services (AWS) Lambda, AWS's serverless Platform as a Service. Our bill for hosting this blog in Lambda, at the high end, will be a crazy $1.05 a month.

AWS Lambda

We have built our Blog leveraging .NET Core 2.1 and AWS Lambda. Lambda enables developers to deploy Serverless code without having to manage infrastructure. From scratch, the blog can deploy in a total of two minutes. Two minutes.

.NET Core has been Monkton's server language of choice since it was created, because .NET Core can run within a Linux Docker container, on macOS, and on Windows. As a result, .NET Core can be deployed within AWS Lambda with little work.

GitLab - Deploying Serverless resources, automagically

Monkton is a GitLab partner and we just love the technology. We have made a concerted effort to migrate all of our resources from GitHub, Jenkins, and other services to GitLab. GitLab just does it all, from source control to continuous integration and continuous deployment.

With this and a bit of thinking, we are able to rapidly build and deliver serverless solutions to AWS Lambda with no-touch deployments. Monkton’s goal is automation wherever possible.

Developers work on a feature branch. The feature branch performs build and testing with the CI/CD pipeline. Once the developer pulls into the development-master branch and the pull is approved, it builds, tests, and automatically deploys to the test instance of the blog. Finally, once the development-master branch is pulled into master and that pull is approved, it builds, tests, and automatically deploys to the production instance of the blog.
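The branch flow above can be sketched as a small shell helper that decides which stack, if any, a branch deploys to. This is only an illustration: the function name stack_for_branch is hypothetical, the stack names are the ones used in the .gitlab-ci.yml later in this post, and the sketch follows the prose description in treating feature branches as build-and-test only.

```shell
#!/bin/sh
# Map a Git branch name to the CloudFormation stack it deploys to.
# stack_for_branch is a hypothetical helper, not part of the post's scripts.
stack_for_branch() {
  case "$1" in
    master)             echo "monkton-blog" ;;       # production instance
    development-master) echo "monkton-blog-test" ;;  # test instance
    *)                  return 1 ;;                  # e.g. feature/*: no deployment
  esac
}

# In a GitLab job, CI_COMMIT_REF_NAME holds the branch being built.
branch="${CI_COMMIT_REF_NAME:-development-master}"
if stack=$(stack_for_branch "$branch"); then
  echo "deploy $branch -> $stack"
else
  echo "no deployment for $branch"
fi
```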

There is simply no user interaction at all to deploy the code. Automation.

To achieve this, we have the .gitlab-ci.yml declaration, which defines how we perform all of our CI/CD functions, and the lambda-publish.sh script, which does the heavy lifting. These two combined produce our output.

To build and deploy for .NET Core, we do have our own custom Docker image that has the .NET Core SDK and various other tools installed. This Docker image does the dirty work of building, testing, and deploying.

.NET Core Services

AWS has developed a CLI tool for the .NET Core CLI tools that allows you to create and publish projects using Lambda. Your project will need to make a few minor changes to run in Lambda, but they are pretty painless. The biggest step is adding the Lambda entry point into your application. This is a special handler that transforms the Lambda request into a request that your .NET Core application can understand. Other than that, you are pretty much done.

From there, the dotnet CLI tools (the Lambda add-on) take care of the rest. The lambda-publish script below goes into more detail.

Additionally the dotnet CLI tools allow you to create .NET Core projects from scratch with the preconfigured AWS Lambda hooks.
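As a rough sketch of that setup, the commands below install the Lambda add-on and the AWS project templates, then create a new ASP.NET Core project with the Lambda hooks in place. The project name MyBlog and the helper names are placeholders, and the exact template short name may differ between template versions. The sketch only prints the commands unless DRY_RUN=0 is set.

```shell
#!/bin/sh
# Print each command by default; set DRY_RUN=0 to actually run it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then echo "$*"; else "$@"; fi
}

# bootstrap_lambda_project is a hypothetical helper for illustration.
bootstrap_lambda_project() {
  project_name="$1"
  # Adds the `dotnet lambda ...` commands (the Lambda add-on)
  run dotnet tool install -g Amazon.Lambda.Tools
  # Adds the AWS Lambda project templates to `dotnet new`
  run dotnet new -i Amazon.Lambda.Templates
  # Creates an ASP.NET Core project preconfigured for Lambda
  run dotnet new serverless.AspNetCoreWebAPI --name "$project_name"
}

bootstrap_lambda_project "MyBlog"
```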

The provisioned .NET Core project comes with a .yaml file for CloudFormation that the Lambda publish hooks use. You can customize this for your specific project. Note, this uses a transform within CloudFormation, so you need to be cautious of what you do within CloudFormation. Our serverless.yaml file below is an example of a working YAML file that we are using to deploy.

The Pieces

To build and deploy with .NET Core and Lambda, you will need to understand all the services you’ll want and need to consume to get started. We use:

- Lambda

- API Gateway

- KMS

- SSM (to store secrets)

- S3 (to store the zip of the build, a bucket to store our blog posts, and a final bucket for our CDN)

- Route53 (for DNS)

- ACM (for certificates)

- CloudFront (to distribute the images, css, and JavaScript files)

- IAM Credentials (access to resources)

AWS API Gateway

API Gateway is AWS's means to wrap server calls to backend resources. In this case, it forwards calls efficiently to AWS Lambda resources.

To access your AWS Lambda functions, you will configure a Route 53 DNS configuration, create an ACM Certificate for your domain, and finally configure a custom domain for API Gateway. Each of these will link together to enable Lambda services to be easily consumed.

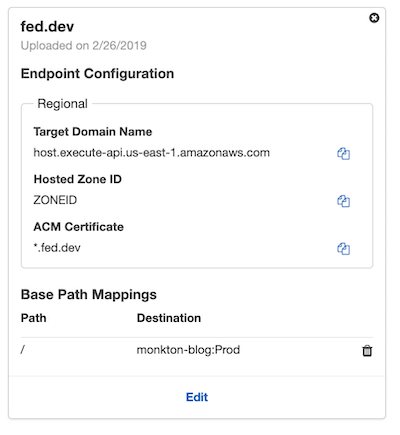

After the function and gateway have been deployed, you can configure a custom domain. You will want to configure this in the manner below. Ensure that you configure the Base Path Mappings to map to / and forward that to your API Gateway and Stage.

Be mindful to set your domain and ACM certificate correctly.
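The same custom domain setup can also be done from the AWS CLI instead of the console. The sketch below is one possible way to do it, not the steps we used: the domain, certificate ARN, and API ID are placeholders, and the stage name Prod is assumed from the stack output shown at the end of this post. It prints the commands unless DRY_RUN=0 is set.

```shell
#!/bin/sh
# Print each command by default; set DRY_RUN=0 to actually run it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then echo "$*"; else "$@"; fi
}

# map_custom_domain is a hypothetical helper for illustration.
map_custom_domain() {
  domain="$1"; cert_arn="$2"; api_id="$3"; stage="$4"
  # Register the custom domain with its ACM certificate
  run aws apigateway create-domain-name \
    --domain-name "$domain" --certificate-arn "$cert_arn"
  # Map the root path (no base path) to the deployed API and stage
  run aws apigateway create-base-path-mapping \
    --domain-name "$domain" --base-path "(none)" \
    --rest-api-id "$api_id" --stage "$stage"
}

map_custom_domain "blog.example.com" \
  "arn:aws:acm:us-east-1:ACCOUNT_ID:certificate/CERT_ID" "API_ID" "Prod"
```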

KMS

AWS KMS (Key Management Service) enables you to create cryptographic keys, or even bring your own. Through IAM, you can grant specific resources permission to leverage the keys, from managing them to using them to decrypt.

You will want to assign aliases to them. We follow the pattern of alias/monkton/s3 or alias/monkton/ssm to define keys for specific resources. You can go a step further and define keys for projects; it depends on what your level of paranoia is.

We will leverage KMS for S3 and SSM within our project. With that, when we create our IAM credentials in CloudFormation, we will define specific access permissions for the IAM role to leverage the KMS keys.
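A minimal sketch of creating a key and giving it an alias from the AWS CLI is shown here. KMS requires alias names to start with alias/, which is why the put-parameter command later in this post refers to the key as alias/monkton/ssm. The helper name and the key description are made up for the example, and the sketch only prints the commands unless DRY_RUN=0 is set.

```shell
#!/bin/sh
# create_aliased_key is a hypothetical helper for illustration.
# Prints the commands by default; set DRY_RUN=0 to actually run them.
create_aliased_key() {
  alias_name="$1"; description="$2"
  # KMS alias names must begin with "alias/"
  case "$alias_name" in
    alias/*) ;;
    *) echo "alias must start with alias/: $alias_name" >&2; return 1 ;;
  esac
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "aws kms create-key --description \"$description\""
    echo "aws kms create-alias --alias-name $alias_name --target-key-id KEY_ID"
    return 0
  fi
  # Create the key, capture its ID, then attach the alias to it
  key_id=$(aws kms create-key --description "$description" \
    --query KeyMetadata.KeyId --output text)
  aws kms create-alias --alias-name "$alias_name" --target-key-id "$key_id"
}

create_aliased_key "alias/monkton/ssm" "Key for SSM secrets"
```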

SSM For Secrets

AWS SSM (Systems Manager) has a component, Parameter Store, that allows you to leverage KMS keys to store secrets, securely encrypted within AWS. You can then leverage the AWS CLI or even native code to grab the SSM values to do what you need with them. To deploy with the dotnet CLI for Lambda, we will put our IAM credentials and secrets into SSM. The lambda-publish.sh script below will then grab those credentials from SSM and set them as a component of the deployment parameters.

We do this so we can leverage GitLab’s CI/CD pipeline to deploy apps to Lambda automatically. Our goal here wasn’t to grant GitLab’s IAM credentials more permissions (in this case the IAM role below), but to create specific IAM credentials that are tied only to the Lambda deployments. We can thus leverage the GitLab IAM credentials to pull from SSM and pass those values on.

To put a value into SSM, we name it with the prefix gitlab. We do this so that when we grant access with IAM, we only do it for SSM values that begin with that prefix.

aws ssm put-parameter --name gitlab-serverless-iam-name --description "gitlab-serverless-iam-name" --value ":value:" --type SecureString --key-id "alias/monkton/ssm" --overwrite --region us-east-1
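To illustrate the prefix-based grant, the sketch below prints an IAM policy that allows reading only parameters whose names begin with gitlab-. It is an assumption about what such a policy could look like, not the policy we actually attached; the helper name is hypothetical and ACCOUNT_ID is a placeholder.

```shell
#!/bin/sh
# ssm_prefix_policy is a hypothetical helper that prints an IAM policy
# limiting ssm:GetParameter to parameters starting with a given prefix.
ssm_prefix_policy() {
  region="$1"; account_id="$2"; prefix="$3"
  cat <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": "ssm:GetParameter",
      "Resource": "arn:aws:ssm:${region}:${account_id}:parameter/${prefix}*"
    }
  ]
}
EOF
}

ssm_prefix_policy "us-east-1" "ACCOUNT_ID" "gitlab-"
```

Because the values are SecureString parameters, the same credentials would also need kms:Decrypt on the key behind alias/monkton/ssm in order to read them with --with-decryption.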

S3 for Storage

Amazon’s S3 (Simple Storage Service) allows you to store everything from simple text files to gigabyte-sized files with ease. Additionally, you can leverage KMS to protect files, ensuring that the contents of the files are encrypted while at rest.

We have three separate S3 buckets. When deploying with Lambda, the code is uploaded to an S3 bucket so Lambda can deploy from it. To this end, we reuse the same bucket for all of our Lambda projects, with a separate prefix for each project. We have a second bucket for our blog's Markdown (MD) files, which the blog pulls from. Finally, there is an S3 bucket that we leverage as our CDN content source, which is described later.

With S3, we do need to add some permissions to enable Lambda and CloudFront to properly pull from the buckets...

S3 CDN Configuration

You will need to make the files public within this bucket to enable access via the CDN.

Depending on how you want to manipulate cache control for your CDN, you may want to add metadata to define the Cache-Control header and set it to max-age=1314000 or whatever your preferred cache duration is.
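One way to set both the public ACL and the Cache-Control metadata at upload time is with aws s3 cp, sketched below. The local folder ./wwwroot and the bucket name cdn-bucket-name are placeholders, and the helper name is hypothetical. The sketch prints the command unless DRY_RUN=0 is set.

```shell
#!/bin/sh
# Print each command by default; set DRY_RUN=0 to actually run it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then echo "$*"; else "$@"; fi
}

# publish_static_assets is a hypothetical helper for illustration.
publish_static_assets() {
  source_dir="$1"; bucket="$2"; max_age="${3:-1314000}"
  # Upload everything, make it publicly readable, and set Cache-Control
  run aws s3 cp "$source_dir" "s3://$bucket/" --recursive \
    --acl public-read --cache-control "max-age=$max_age"
}

publish_static_assets "./wwwroot" "cdn-bucket-name"
```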

Finally, for our CDN, we will need to add a CORS configuration to allow access from the CDN. You can refine these policies as needed.

<?xml version="1.0" encoding="UTF-8"?>
<CORSConfiguration xmlns="http://s3.amazonaws.com/doc/2006-03-01/">
  <CORSRule>
    <AllowedOrigin>*</AllowedOrigin>
    <AllowedMethod>GET</AllowedMethod>
    <MaxAgeSeconds>1800</MaxAgeSeconds>
    <AllowedHeader>*</AllowedHeader>
  </CORSRule>
</CORSConfiguration>

S3 Lambda Code Configuration

For our code bucket, we need to add a special configuration to enable Lambda to deploy the code.

{
  "Version": "2012-10-17",
  "Id": "Lambda access bucket policy",
  "Statement": [
    {
      "Sid": "All on objects in bucket lambda",
      "Effect": "Allow",
      "Principal": {
        "AWS": "arn:aws:iam::ACCOUNT_ID:root"
      },
      "Action": "s3:*",
      "Resource": "arn:aws:s3:::BUCKET_NAME/*"
    },
    {
      "Sid": "All on bucket by lambda",
      "Effect": "Allow",
      "Principal": {
        "AWS": "arn:aws:iam::ACCOUNT_ID:root"
      },
      "Action": "s3:*",
      "Resource": "arn:aws:s3:::BUCKET_NAME"
    }
  ]
}

S3 Markdown sources

Voila, nothing special.

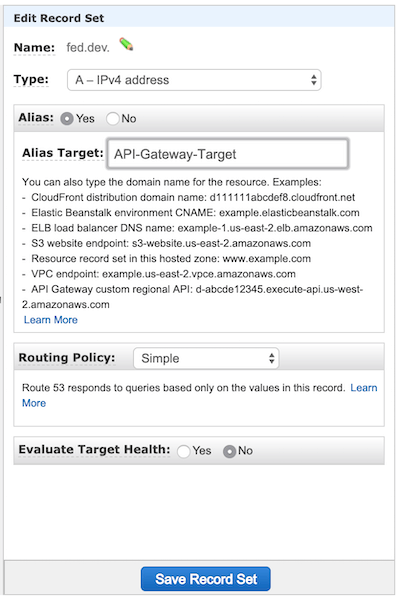

Route53

Route53 is rather simple: it is a DNS service that allows you to configure DNS entries. Once the API Gateway has been deployed, you will add the entry for your domain here. You will need to configure the following in the zone for your domain:

ACM

ACM is Amazon’s certificate management service. You can create certificates within AWS leveraging their certificate authority or you can upload existing certificates.

Once you have uploaded an existing certificate or created a new one, you will be able to leverage it within API Gateway to set up DNS routing.

We will leverage ACM for API Gateway and CloudFront.

CloudFront

CloudFront is AWS's global content delivery network. We leverage CloudFront to distribute the static resources for our blog. Be it images, JavaScript, or CSS, it will be delivered from CloudFront. We do this for a few reasons. The goal with Lambda should be performing compute in Lambda and that is it. Delivering static resources is a suboptimal way to leverage serverless computing. Additionally, there are caveats we won’t get into about delivering non-text resources via Lambda and API Gateway.

You will need to configure CloudFront following these directions: Directions Here

Configuring IAM Credentials for GitLab

To have GitLab update the Lambda functions automatically, we need to create a custom IAM credential with specific inline permissions to the necessary resources. We then store the IAM key and secret in AWS SSM so they can be pulled automatically by the GitLab CI/CD server. We have a shell script that pulls the values from SSM and passes them to the deployment service.

There are five services we need access to:

- S3

- IAM

- Lambda

- API Gateway

- CloudFormation

Some notes on the IAM policies below: we have attempted to constrain the resources, but the scopes for IAM, CloudFormation, and API Gateway still need to be refined.

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "VisualEditor0",
      "Effect": "Allow",
      "Action": [
        "s3:PutObject",
        "s3:GetObject"
      ],
      "Resource": [
        "arn:aws:s3:::monkton-blog-serverless-code",
        "arn:aws:s3:::monkton-blog-serverless-code/*",
        "arn:aws:s3:::monkton-blog-test-serverless-code",
        "arn:aws:s3:::monkton-blog-test-serverless-code/*"
      ]
    }
  ]
}

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "VisualEditor0",
      "Effect": "Allow",
      "Action": [
        "lambda:CreateFunction",
        "lambda:UpdateFunctionCode",
        "lambda:UpdateFunctionConfiguration",
        "lambda:GetFunction",
        "lambda:DeleteAlias",
        "lambda:GetFunctionConfiguration",
        "lambda:DeleteFunction",
        "lambda:GetAlias",
        "lambda:UpdateAlias",
        "lambda:CreateAlias",
        "lambda:AddPermission"
      ],
      "Resource": "arn:aws:lambda:us-east-1:ACCOUNT_ID:function:monkton-blog*"
    }
  ]
}

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "VisualEditor0",
      "Effect": "Allow",
      "Action": [
        "iam:GetRole",
        "iam:DeleteRolePolicy",
        "iam:PutRolePolicy",
        "iam:PassRole",
        "iam:CreateRole",
        "iam:DeleteRole"
      ],
      "Resource": "*"
    }
  ]
}

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "VisualEditor0",
      "Effect": "Allow",
      "Action": [
        "cloudformation:*"
      ],
      "Resource": "*"
    }
  ]
}

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "VisualEditor0",
      "Effect": "Allow",
      "Action": "apigateway:*",
      "Resource": "arn:aws:apigateway:us-east-1::*"
    }
  ]
}

lambda-publish.sh

The script below consumes the credentials. USERNAME and SECRET are pulled directly from AWS SSM and passed to the dotnet lambda deploy-serverless command, which deploys the .NET Core serverless solution.

#!/bin/sh
set -e

dotnet restore

# The stack name is passed as the first argument, e.g. monkton-blog-test
STACK_NAME=$1
S3_BUCKET="bucket-name"
AWS_REGION="us-east-1"

# Make the globally installed dotnet tools (dotnet lambda) available
export PATH="$PATH:/root/.dotnet/tools"

# Grab the credentials
USERNAME=$(aws ssm get-parameter --region "$AWS_REGION" --name gitlab-serverless-iam-name --with-decryption --query Parameter.Value --output text)
SECRET=$(aws ssm get-parameter --region "$AWS_REGION" --name gitlab-serverless-iam-secret --with-decryption --query Parameter.Value --output text)

# Deploy with Lambda
dotnet lambda deploy-serverless \
    --stack-name "$STACK_NAME" \
    --s3-bucket "$S3_BUCKET" \
    --s3-prefix "$STACK_NAME/" \
    --region "$AWS_REGION" \
    --aws-access-key-id "$USERNAME" \
    --aws-secret-key "$SECRET"
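The script takes the stack name as its first argument and does not check that one was given. A small guard like the one sketched here could fail fast before any AWS call is made. The function name require_stack_name is hypothetical and is not part of the script above.

```shell
#!/bin/sh
# require_stack_name is a hypothetical guard: it echoes the stack name
# if one was provided and fails with a usage message otherwise.
require_stack_name() {
  if [ -z "${1:-}" ]; then
    echo "usage: lambda-publish.sh STACK_NAME" >&2
    return 1
  fi
  echo "$1"
}

# In lambda-publish.sh this could replace the bare STACK_NAME=$1:
#   STACK_NAME=$(require_stack_name "$1") || exit 1
require_stack_name "monkton-blog-test"
```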

.gitlab-ci.yml

# Defines our .NET Core docker image
image: monkton/gitlab-runner-dotnetcore:2.1.5.20190226

stages:
  - test
  - build
  - deploy

test:
  stage: test
  tags:
    - dotnet-docker
  script:
    - dotnet build

# Perform a build, in theory this should fail when tests fail to build right
# we will build both development and release...
build:
  stage: build
  tags:
    - dotnet-docker
  script:
    - dotnet build

# Build the development branch, without deployment to docker, and
# don't update the build number
feature:
  stage: deploy
  only:
    - /^feature\/.*$/
  tags:
    - dotnet-docker
  script:
    - ./lambda-publish.sh monkton-blog-test

# Build the development branch, with deployment to docker, and
# update the build number
developmentmaster:
  stage: deploy
  only:
    - development-master
  tags:
    - dotnet-docker
  script:
    - ./lambda-publish.sh monkton-blog-test

# Build the release branch, with deployment to docker, and
# update the build number
master:
  stage: deploy
  only:
    - master
  tags:
    - dotnet-docker
  script:
    - ./lambda-publish.sh monkton-blog

serverless.yaml

---
AWSTemplateFormatVersion: '2010-09-09'
Transform: AWS::Serverless-2016-10-31
Description: An AWS Serverless Application that uses the ASP.NET Core framework running in Amazon Lambda.

Parameters:
  ShouldCreateBucket:
    Type: String
    AllowedValues:
      - 'true'
      - 'false'
    Description: If true then the S3 bucket that will be proxied will be created with the CloudFormation stack.
  BucketName:
    Type: String
    Description: Name of S3 bucket that will be proxied. If left blank a name will be generated.
    MinLength: '0'
  BlogS3Bucket:
    Type: String
    Description: S3 Bucket to access data from
    MinLength: '0'
    Default: bucket-name
  parAppFormationS3KMSId:
    Type: String
    Description: KMS Key ARN for S3
    AllowedPattern: '.+'
    Default: arn:aws:kms:us-east-1:ACCOUNT_ID:key/ARN_GUID
  parAppFormationSSMPrefix:
    Type: String
    Description: SSM Prefix for Microservices
    AllowedPattern: '.+'
    Default: monktonblog
  parAppFormationSSMKMSId:
    Type: String
    Description: KMS Key ARN for SSM
    AllowedPattern: '.+'
    Default: arn:aws:kms:us-east-1:ACCOUNT_ID:key/ARN_GUID

Conditions:
  MicroservicesIsInGovCloudCondition:
    Fn::Equals:
      - Ref: "AWS::Region"
      - 'us-gov-west-1'
  CreateS3Bucket:
    Fn::Equals:
      - Ref: ShouldCreateBucket
      - 'true'
  BucketNameGenerated:
    Fn::Equals:
      - Ref: BucketName
      - ''

Resources:
  #######################################################
  #################### S3 IAM ROLES ####################
  #######################################################
  LambdaAppRole:
    Type: AWS::IAM::Role
    Properties:
      AssumeRolePolicyDocument:
        Version: '2012-10-17'
        Statement:
          - Effect: Allow
            Principal:
              Service:
                - lambda.amazonaws.com
            Action:
              - sts:AssumeRole
      Path: "/"
      Policies:
        - PolicyName: lambda-default-customized
          PolicyDocument:
            Version: '2012-10-17'
            Statement:
              - Effect: Allow
                Action:
                  - cloudwatch:*
                  - events:*
                  - iam:ListAttachedRolePolicies
                  - iam:ListRolePolicies
                  - iam:ListRoles
                  - iam:PassRole
                  - lambda:*
                  - logs:*
                  - sns:ListSubscriptions
                  - sns:ListSubscriptionsByTopic
                  - sns:ListTopics
                  - sns:Subscribe
                  - sns:Unsubscribe
                Resource: "*"
        - PolicyName: lambda-get-s3-config
          PolicyDocument:
            Version: '2012-10-17'
            Statement:
              - Effect: Allow
                Action:
                  - s3:GetObject
                Resource:
                  Fn::Join:
                    - ''
                    - - 'arn:'
                      - Fn::If:
                          - MicroservicesIsInGovCloudCondition
                          - 'aws-us-gov'
                          - 'aws'
                      - ':s3:::'
                      - !Ref BlogS3Bucket
                      - '/*'
        - PolicyName: lambda-kms-access-s3
          PolicyDocument:
            Statement:
              - Effect: Allow
                Action:
                  - kms:Decrypt
                  - kms:Encrypt
                Resource: !Ref parAppFormationS3KMSId
        - PolicyName: lambda-kms-access-ssm
          PolicyDocument:
            Statement:
              - Effect: Allow
                Action:
                  - kms:Decrypt
                Resource: !Ref parAppFormationSSMKMSId
        - PolicyName: lambda-ssm-access
          PolicyDocument:
            Statement:
              - Effect: Allow
                Action:
                  - ssm:GetParameter
                Resource:
                  Fn::Join:
                    - ''
                    - - 'arn:'
                      - Fn::If:
                          - MicroservicesIsInGovCloudCondition
                          - 'aws-us-gov'
                          - 'aws'
                      - ':ssm:'
                      - Ref: "AWS::Region"
                      - ':'
                      - Ref: "AWS::AccountId"
                      - ':parameter/'
                      - !Ref parAppFormationSSMPrefix
                      - '*'

  # https://github.com/awslabs/serverless-application-model/blob/master/versions/2016-10-31.md#event-source-object
  LambdaAppDotNetCoreFunction:
    Type: AWS::Serverless::Function
    Properties:
      Handler: Monkton.Blog::Monkton.Blog.LambdaEntryPoint::FunctionHandlerAsync
      Runtime: dotnetcore2.1
      CodeUri: ''
      MemorySize: 256
      Timeout: 30
      Role: !GetAtt LambdaAppRole.Arn
      Environment:
        Variables:
          APP_REGION: !Ref "AWS::Region"
          BLOG_CONTENT_BUCKET: !Ref BlogS3Bucket
      Events:
        PutResource:
          Type: Api
          Properties:
            Path: "/{proxy+}"
            Method: ANY
        RootResource:
          Type: Api
          Properties:
            Path: "/"
            Method: GET

Outputs:
  ApiURL:
    Description: API endpoint URL for Prod environment
    Value:
      Fn::Sub: https://${ServerlessRestApi}.execute-api.${AWS::Region}.amazonaws.com/Prod/
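Once a stack has been deployed, the ApiURL output defined in the template can be read back with the AWS CLI, for example to smoke-test the endpoint at the end of a pipeline. This is a sketch of one way to do that, not something our pipeline currently does. The helper name is hypothetical and the stack name is the test stack from .gitlab-ci.yml. It prints the command unless DRY_RUN=0 is set.

```shell
#!/bin/sh
# Print each command by default; set DRY_RUN=0 to actually run it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then echo "$*"; else "$@"; fi
}

# stack_api_url is a hypothetical helper: it looks up the ApiURL output
# of a deployed CloudFormation stack.
stack_api_url() {
  run aws cloudformation describe-stacks --stack-name "$1" \
    --query "Stacks[0].Outputs[?OutputKey=='ApiURL'].OutputValue" \
    --output text
}

stack_api_url "monkton-blog-test"
```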